Non-functional Requirements


Note

This feature is currently in beta and only available via our command line tools.

Non-functional Requirements (NFRs) allow you to check whether certain criteria are met. For example, a request should respond within 500 ms or with a specific HTTP status code. After defining your requirements in a YAML file, you can use the forge CLI to check them against one of your existing test runs.

Definition

You can define formal, non-functional requirements using YAML. Note that this is a separate file from your test case definition. Here is a short example:

version: "0.1"
requirements:
  # require overall error ratio to be below 0.5%
  - http.error_ratio:
      test: ["<", 0.005]
  # require overall 99th latency percentile to be at most 1.5 sec
  - http.latency:
      select:
        type: percentile
        value: 99.0
      test: ["<=", 1500]

You can use this definition to check finished test runs against these requirements.
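As a rough illustration of what the first requirement measures, the sketch below computes an error ratio from a list of HTTP status codes, counting status >= 400 as an error (the definition given for http.error_ratio under Requirement Definition), and applies the < 0.005 threshold. The function name and sample data are hypothetical; the real check runs inside the CLI against the recorded test run data.

```python
def error_ratio(status_codes: list[int]) -> float:
    """Fraction of requests with an HTTP status >= 400 (hypothetical helper)."""
    if not status_codes:
        # Assumption for this sketch: no requests means no errors.
        return 0.0
    errors = sum(1 for status in status_codes if status >= 400)
    return errors / len(status_codes)


# Hypothetical sample: 1000 requests, 3 of which returned an error status.
statuses = [200] * 990 + [302] * 7 + [500, 503, 404]
ratio = error_ratio(statuses)

# Mirrors `test: ["<", 0.005]` from the YAML above.
print(f"error ratio = {ratio:.4f}, passed = {ratio < 0.005}")
# prints: error ratio = 0.0030, passed = True
```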

Usage

Launch a test case and verify it

With the stormforge CLI:

stormforge create test-run --test-case <testcase-ref> --nfr-check-file <nfr-file.yaml>

With the forge CLI:

forge test-case launch <testcase-ref> --nfr-check-file <nfr-file.yaml>

This launches the specified test case, waits for it to finish, and then performs an NFR check on the result. If any check fails, the command exits with a non-zero exit code.

On a previously executed test run

You can use the CLI to check your defined requirements against an existing test-run:

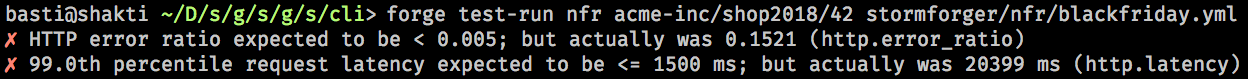

With the stormforge CLI:

stormforge check nfr --test-run <test-run-ref> --from-file <nfr-file.yaml>

With the forge CLI:

forge test-run nfr <test-run-ref> <nfr-file.yaml>

The output lists the result of each check and whether it succeeded.

The exit code of the nfr command reflects the overall result: zero if all checks succeeded, non-zero if any check failed.

This makes it easy to integrate NFR checks as quality gates in CI/CD pipelines.

Example:

forge test-run nfr acme-inc/shop2018/42 nfr/blackfriday.yml
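In a CI job the gate usually needs only the exit code. The Python sketch below wraps the forge test-run nfr command shown above with subprocess and turns its exit code into a pass/fail boolean. The helper names nfr_command and nfr_gate are hypothetical, and the run parameter exists only so the CLI call can be swapped out in tests; most CI systems can simply run the command directly and fail the step on a non-zero exit code.

```python
import subprocess
from typing import Callable, Sequence


def nfr_command(test_run_ref: str, nfr_file: str) -> list[str]:
    """Build the `forge test-run nfr` invocation documented above."""
    return ["forge", "test-run", "nfr", test_run_ref, nfr_file]


def nfr_gate(
    test_run_ref: str,
    nfr_file: str,
    run: Callable[[Sequence[str]], subprocess.CompletedProcess] = subprocess.run,
) -> bool:
    """Return True if all NFR checks passed, i.e. the CLI exited with code 0."""
    result = run(nfr_command(test_run_ref, nfr_file))
    return result.returncode == 0
```

For example, a pipeline step could call sys.exit(0 if nfr_gate("acme-inc/shop2018/42", "nfr/blackfriday.yml") else 1) to pass the CLI's verdict on as the step's own exit status.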

Requirement Definition

We currently support the following requirement types:

- test.completed, to check whether your test was completed (and not aborted)
- test.duration, to check the test duration
- test.cluster.utilization.cpu, to check the test cluster CPU utilization
- checks, to assert your checks
- http.error_ratio, to check HTTP error ratios (status >= 400)
- http.status_code, to check HTTP status codes
- http.latency, to check HTTP request latencies
- http.apdex, to check Apdex scores
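Before handing a file to the CLI, you may want to catch misspelled requirement names early. The sketch below assumes the YAML has already been parsed into Python lists and dicts (for example with a YAML library, not shown) and flags any requirement name that is not in the list above. This is a hypothetical lint step of your own, not something the CLI is documented to provide.

```python
# The requirement types listed above, mapped to what each one checks.
SUPPORTED_REQUIREMENTS = {
    "test.completed": "test was completed (and not aborted)",
    "test.duration": "test duration",
    "test.cluster.utilization.cpu": "test cluster CPU utilization",
    "checks": "check results",
    "http.error_ratio": "HTTP error ratio (status >= 400)",
    "http.status_code": "HTTP status codes",
    "http.latency": "HTTP request latencies",
    "http.apdex": "Apdex scores",
}


def unknown_requirement_types(requirements: list[dict]) -> list[str]:
    """Return requirement names that are not in the supported list."""
    unknown = []
    for requirement in requirements:
        # Each list item is a single-key mapping: {requirement_type: settings}.
        for name in requirement:
            if name not in SUPPORTED_REQUIREMENTS:
                unknown.append(name)
    return unknown


requirements = [
    {"http.error_ratio": {"test": ["<", 0.005]}},
    {"http.latancy": {"select": "average", "test": ["<", 1000]}},  # misspelled
]
print(unknown_requirement_types(requirements))  # ['http.latancy']
```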

Every requirement has up to three parts:

- Select metric (select)
- Filter subject (where)
- Test to be performed (test)

- http.latency:
    select:
      type: percentile
      value: 99.0
    where:
      status: ["<", 400]
      tag:
        - checkout
        - register
    test: ["<", 1500]
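Once the YAML is loaded, the three parts map onto a small data structure. The sketch below assumes each list item has already been parsed (by a YAML library, not shown) into a single-key dict and splits it into metric, select, where and test. The Requirement type and parse_requirement are hypothetical names, and treating shorthand entries such as test.completed: true as a bare test value is likewise an assumption.

```python
from typing import Any, NamedTuple


class Requirement(NamedTuple):
    """The parts of one requirement entry (hypothetical representation)."""
    metric: str
    select: Any  # e.g. "average" or {"type": "percentile", "value": 99.0}
    where: dict  # filters; empty means all requests
    test: Any  # e.g. ["<", 1500]


def parse_requirement(entry: dict) -> Requirement:
    """Split a single-key YAML list item into metric, select, where and test."""
    ((metric, settings),) = entry.items()  # raises if the item has != 1 key
    if not isinstance(settings, dict):
        # Shorthand such as `test.completed: true` carries only the test value.
        return Requirement(metric, None, {}, settings)
    return Requirement(
        metric,
        settings.get("select"),
        settings.get("where", {}),
        settings.get("test"),
    )


# The http.latency example above, as a YAML parser would load it.
entry = {
    "http.latency": {
        "select": {"type": "percentile", "value": 99.0},
        "where": {"status": ["<", 400], "tag": ["checkout", "register"]},
        "test": ["<", 1500],
    }
}
req = parse_requirement(entry)
print(req.metric, req.select, req.where, req.test)
```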

Select Metric

With select you can specify what metric or aggregation you are interested in.

In this example we are interested in the 99th percentile.
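To make select concrete for a percentile: the latencies of the selected requests are sorted and the value at the corresponding rank is taken. The sketch below uses the nearest-rank definition, which is an assumption; this page does not say which percentile method StormForge applies, and other methods (for example linear interpolation) can give slightly different results on small samples.

```python
import math


def percentile_nearest_rank(values: list[float], p: float) -> float:
    """p-th percentile (0 < p <= 100) using the nearest-rank method (assumed)."""
    if not values:
        raise ValueError("no samples to aggregate")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(values)
    # 1-based rank; multiplying before dividing avoids float drift for whole p.
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[rank - 1]


# Hypothetical latencies in ms for 100 requests: 1, 2, ..., 100 ms.
latencies = [float(ms) for ms in range(1, 101)]
print(percentile_nearest_rank(latencies, 99.0))  # 99.0
```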

Filter Subject

With where you can specify filters, for example to select only specific requests.

In the example above, we want requests whose status code is below 400 and whose tag is checkout or register. Think of it as SELECT percentile(duration, 99) FROM http.latency WHERE status < 400 AND tag IN ('checkout', 'register').
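The filter semantics described above (every where condition must hold, and a list of tags means "any of these") can be sketched as a small predicate. The request dictionaries and the field names tag, status and duration are hypothetical stand-ins for the recorded test run data, and telling a comparison pair apart from a tag list by its first element is a simplification made for this sketch.

```python
import operator

# Comparison operators accepted in ["$operator", value] pairs.
OPS = {
    "=": operator.eq, "!=": operator.ne,
    "<": operator.lt, "<=": operator.le,
    ">": operator.gt, ">=": operator.ge,
}


def matches(request: dict, where: dict) -> bool:
    """True if `request` satisfies every filter in `where` (filters are ANDed)."""
    for field, condition in where.items():
        value = request.get(field)
        if isinstance(condition, list) and len(condition) == 2 and condition[0] in OPS:
            # Comparison filter, e.g. status: ["<", 400]
            op, threshold = condition
            if value is None or not OPS[op](value, threshold):
                return False
        elif isinstance(condition, list):
            # Membership filter, e.g. tag: [checkout, register] -> tag IN (...)
            if value not in condition:
                return False
        elif value != condition:
            # Plain equality, e.g. tag: checkout
            return False
    return True


requests = [
    {"tag": "checkout", "status": 200, "duration": 420},
    {"tag": "register", "status": 503, "duration": 3100},
    {"tag": "search", "status": 200, "duration": 150},
]
where = {"status": ["<", 400], "tag": ["checkout", "register"]}
selected = [r for r in requests if matches(r, where)]
print(selected)  # only the first request passes both filters
```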

Test

With test you can specify the test criteria that should be applied to the subject.

In the example, we want the subject (the 99th percentile of the selected requests) to be below 1500 ms.

Currently you have to specify the test in the form ["$operator", "$value"]. The operator can be =, !=, <, <=, > or >=.
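Evaluating a test then comes down to looking up the operator and comparing the aggregated subject with the expected value. The sketch below maps each operator to the matching function from Python's standard operator module. Treating a bare value such as test: 1000 (used in the long example below) as an equality check is an assumption inferred from that example's comment, not documented behavior.

```python
import operator
from typing import Any

# The operators listed above, mapped to comparison functions.
OPS = {
    "=": operator.eq, "!=": operator.ne,
    "<": operator.lt, "<=": operator.le,
    ">": operator.gt, ">=": operator.ge,
}


def run_test(subject: float, test: Any) -> bool:
    """Apply a requirement's `test` to the aggregated subject value."""
    if isinstance(test, list):
        op, expected = test
        if op not in OPS:
            raise ValueError(f"unsupported operator: {op!r}")
        return OPS[op](subject, expected)
    # Assumption: a bare value (e.g. `test: 1000`) means equality.
    return subject == test


print(run_test(1320.0, ["<", 1500]))  # True: a 99th percentile of 1320 ms passes
print(run_test(0.93, [">", 0.95]))    # False
print(run_test(1000, 1000))           # True
```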

Long Example

version: "0.1"
requirements:
  # Check if the test was completed and not aborted
  - test.completed: true

  # Require that the test cluster CPU was not under too high load
  - test.cluster.utilization.cpu:
      select: average
      test: ["<=", 60]

  # Checks
  # =============================================================================

  # make sure that we have executed exactly 1000 checks
  - checks:
      select: total
      test: 1000

  # allow up to 100 KOs (failed checks) overall
  - checks:
      # possible: 'ok', 'ko', 'success_rate' or 'total'
      select: ko
      test: ["<=", 100]

  # require a success rate of over 95% for
  # 'checkout_step_1' checks
  - checks:
      select: success_rate
      where:
        name: checkout_step_1
      test: [">", 0.95]

  # HTTP Status & Errors
  # =============================================================================

  # require overall error ratio to be below 0.5%
  - http.error_ratio:
      test: ["<", 0.005]

  # require the HTTP error ratio of requests tagged with 'checkout_step_1' or
  # 'checkout_step_2' to be at most 1%.
  - http.error_ratio:
      where:
        tag:
          - checkout_step_1
          - checkout_step_2
      test: ["<=", 0.01] # <= 1%

  # require all requests tagged 'checkout_step_1' to be HTTP 302 redirects.
  - http.status_code:
      select:
        type: ratio
        value: 302
      where:
        tag: checkout_step_1
      test: ["=", 1]

  # require almost all requests tagged 'register' or 'checkout' to be HTTP 301
  # redirects.
  - http.status_code:
      select:
        type: ratio
        value: 301
      where:
        tag:
          - register
          - checkout
      test: [">=", 0.99]

  # require HTTP status code 504 (Gateway Timeout) overall to be under 0.1%.
  - http.status_code:
      select:
        type: ratio
        value: 504
      test: ["<", 0.001]

  # HTTP Latency
  # =============================================================================

  # require the overall average to be below 1 sec
  - http.latency:
      select: average
      test: ["<", 1000]

  # require overall 99th latency percentile to be at most 1.5 sec
  - http.latency:
      select:
        type: percentile
        value: 99.0
      test: ["<=", 1500]

  # require 95th latency percentile of 'checkout' to be below 5 sec
  - http.latency:
      select:
        type: percentile
        value: 95.0
      where:
        tag: checkout
      test: ["<", 5000]

  # require the 99th percentile of requests with status code below 400 and tag
  # 'checkout' or 'register' to be below 1500 ms.
  - http.latency:
      select:
        type: percentile
        value: 99.0
      where:
        status: ["<", 400]
        tag:
          - checkout
          - register
      test: ["<", 1500]

  # HTTP Apdex Score
  # =============================================================================

  # require overall Apdex (750ms) to be at least 0.5
  - http.apdex:
      select:
        value: 750
      test: [">=", 0.5]

  # require the Apdex (750ms) score of requests tagged with 'search' to be at least 0.8.
  - http.apdex:
      select:
        value: 750
      where:
        tag: search
      test: [">=", 0.8]
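For reference, the aggregates used in the long example can be computed roughly as below. This is a sketch under stated assumptions: success_rate is taken as ok / (ok + ko), a status code ratio as the share of responses with that code, and http.apdex is assumed to follow the standard Apdex definition with the select value as the target time T in milliseconds (satisfied at or below T, tolerating up to 4T). The function names and sample numbers are hypothetical.

```python
def success_rate(ok: int, ko: int) -> float:
    """checks success_rate (assumed): share of passed checks among all executed."""
    total = ok + ko
    if total == 0:
        raise ValueError("no checks executed")
    return ok / total


def status_ratio(status_codes: list[int], code: int) -> float:
    """http.status_code with `type: ratio` (assumed): share of responses with `code`."""
    if not status_codes:
        raise ValueError("no responses recorded")
    return status_codes.count(code) / len(status_codes)


def apdex(latencies_ms: list[float], threshold_ms: float) -> float:
    """Standard Apdex score for target time T = threshold_ms.

    Satisfied: latency <= T; tolerating: T < latency <= 4T; otherwise frustrated.
    Score = (satisfied + tolerating / 2) / total.
    """
    if not latencies_ms:
        raise ValueError("no samples")
    satisfied = sum(1 for ms in latencies_ms if ms <= threshold_ms)
    tolerating = sum(1 for ms in latencies_ms if threshold_ms < ms <= 4 * threshold_ms)
    return (satisfied + tolerating / 2) / len(latencies_ms)


# Hypothetical numbers for three requirements in the long example.
print(success_rate(ok=960, ko=40))              # 0.96 -> passes [">", 0.95]
print(status_ratio([302, 302, 302, 302], 302))  # 1.0  -> passes ["=", 1]
print(apdex([200, 600, 900, 2500, 4000], 750))  # 0.6  -> passes [">=", 0.5]
```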