Testing with GitLab CI


Please note

The content on this page pertains only to the new Platform StormForge Performance Testing environment at https://app.stormforge.io/perftest.

Similar integrations with GitLab can be set up using the forge CLI with the Standalone Performance Testing environment.

This guide shows an example GitLab CI/CD workflow utilizing StormForge Performance Testing to perform automated load tests after each deployment.

We also set up a scheduled load test (e.g. once a week) against your application. A validation run is performed against the staging environment on every push to ensure the load test definition is up to date.

For this guide we assume you have general knowledge of GitLab CI/CD and how it works. You also need permission to configure CI/CD variables in your project or group.

The code is available on GitHub at thestormforge/perftest-example-gitlab-ci. Our example service is written in Go, but you don’t need to know Go, as we will only discuss the Performance Testing-related steps.

Note that this is just an example and your actual development workflow may differ. Please take it as inspiration for how to use Performance Testing with GitLab CI/CD.

Preparation

Please follow the Getting Started with the StormForge CLI guide to create the authentication secrets and configure them in your repository.

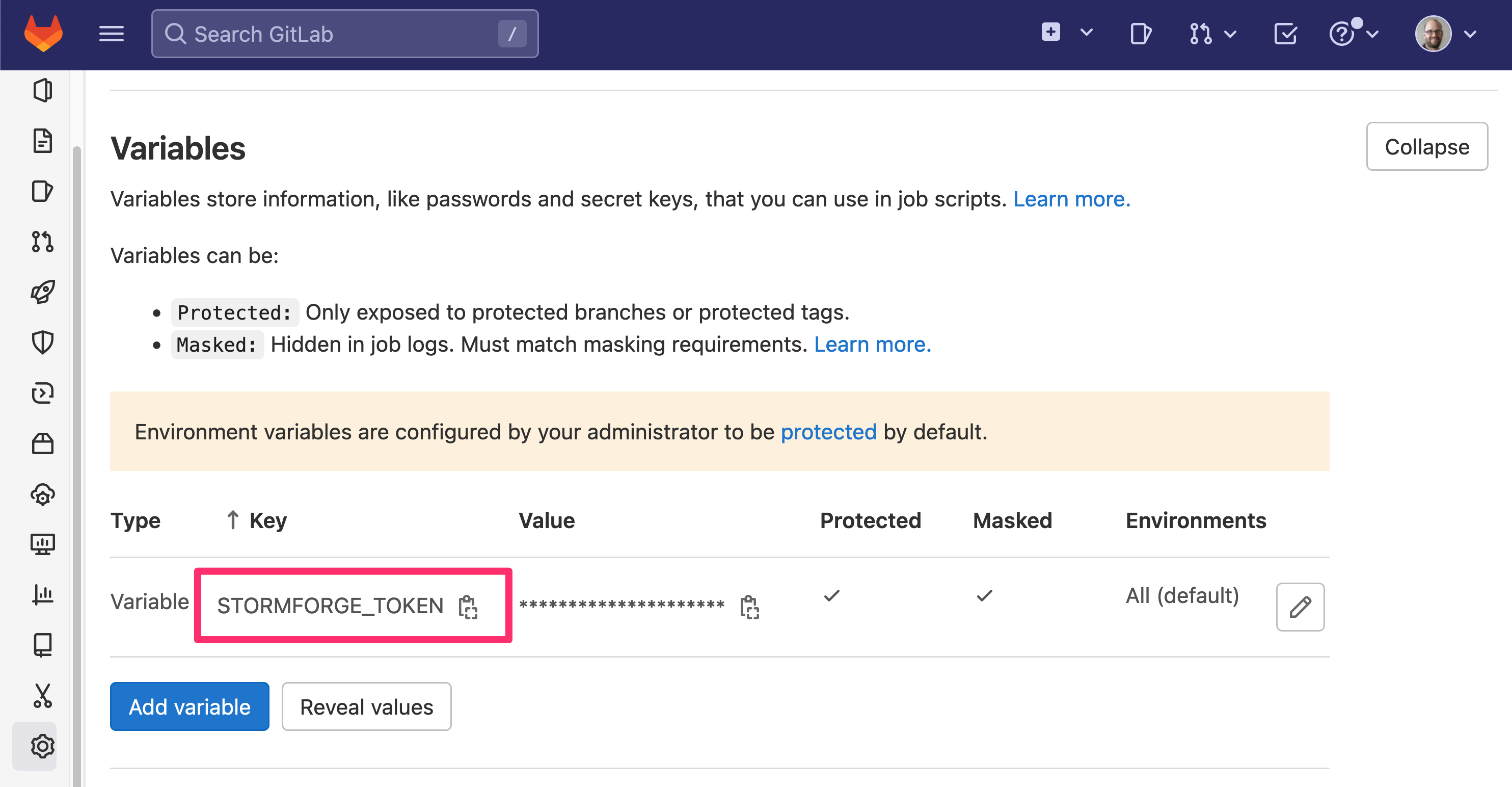

Follow the steps described in GitLab’s Creating variables in the UI guide. Since this is a secret, we recommend ensuring it is masked and protected. It should look like this when you are done:

Note

You can also configure secrets on the group level. If you have multiple repositories using StormForge Performance Testing, this might be easier to manage.

Inside the workflow the secret can be referenced as ${STORMFORGE_TOKEN}, but you don’t need to reference it directly: our CLI picks it up automatically.

Steps

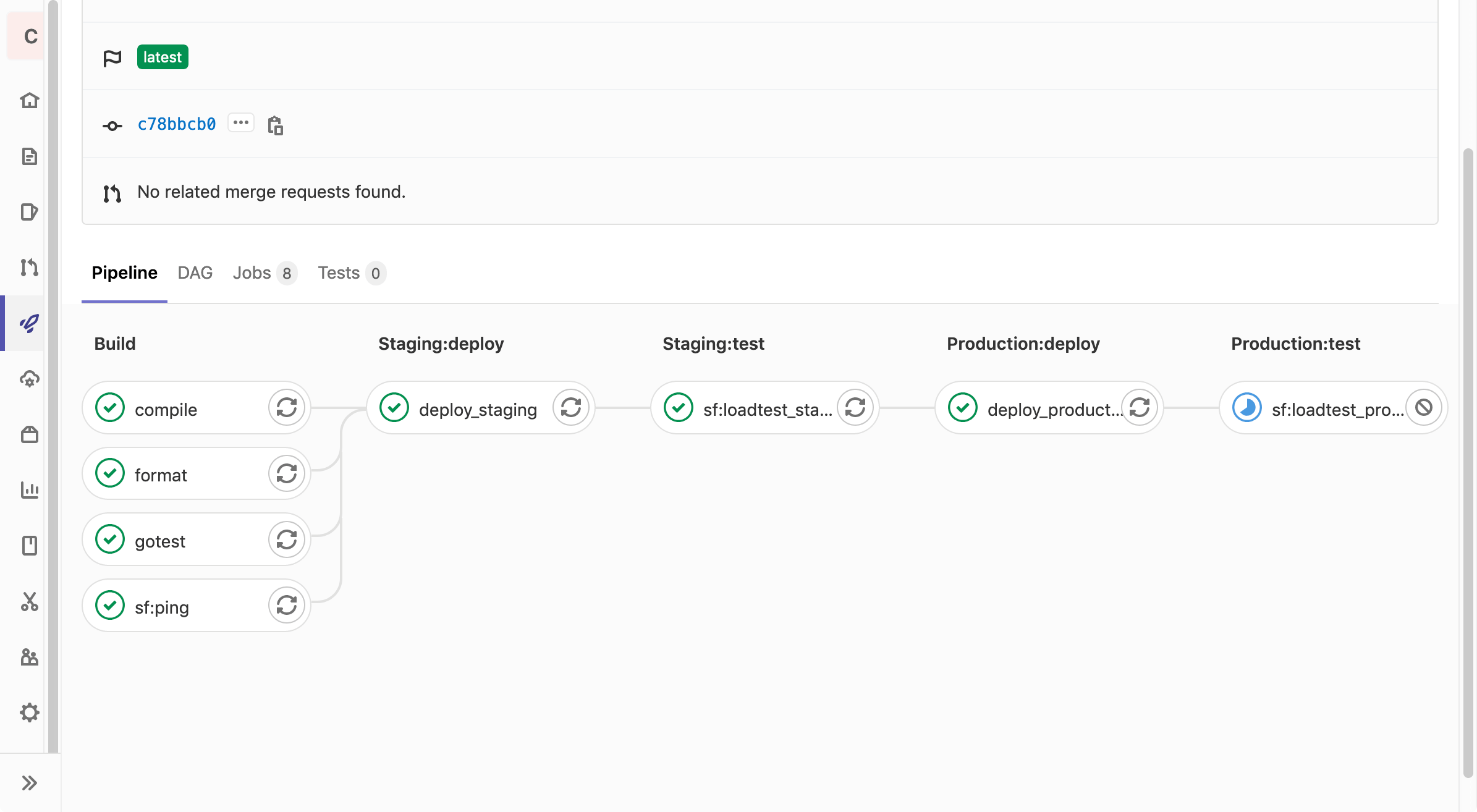

Our workflow file .gitlab-ci.yml consists of five stages: build, staging:deploy, staging:test, production:deploy and production:test.

All stages except production:* run on every commit.

Our goal is to run the load test in validation mode against the staging environment in the staging:test stage, and as a full Test Run with NFR checks against the production environment in the production:test stage.

For both environments we follow the same steps:

- Manage Data Sources

- Build the Test Run and Launch It

There is also a ping step in the build stage to check whether the stormforge CLI can communicate correctly with the API.

Let’s check out the ping job first, then go through each step one by one.

Ping with the stormforge CLI

As a first step, we check that the stormforge CLI works and successfully talks with the StormForge API:

```yaml
.stormforge:
  image:
    name: registry.stormforge.io/library/stormforge-cli:latest
    entrypoint: [""]

sf:ping:
  stage: build
  extends: .stormforge
  script:
    - stormforge ping # check that we can reach the API and our token is correct
```

Here we define a template job .stormforge that we use in the sf:ping job.

Because we reuse the Docker image definition in several jobs, the template saves us from copying it into each one.

The registry.stormforge.io/library/stormforge-cli:latest image is the Docker image that is released as part of our CLI.

You can also copy this image to your private registry, but make sure to update it regularly.

The actual work happens in the stormforge ping command: it performs an authorized ping against the StormForge API and verifies that the authentication secrets are valid and usable.
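When the ping fails, it is sometimes because the variable never reached the job at all, for example because it is not available to the branch the pipeline runs on. The following shell sketch is a hypothetical preflight check (require_stormforge_token is our own name, not part of the CLI or the example repository) that stops with a readable message before stormforge ping runs. It only checks that STORMFORGE_TOKEN is non-empty; validating the token itself remains the job of stormforge ping.

```shell
# Hypothetical preflight check; not part of the StormForge CLI or the example repository.
# Returns non-zero with a readable message if STORMFORGE_TOKEN is empty or unset.
require_stormforge_token() {
  if [ -z "${STORMFORGE_TOKEN:-}" ]; then
    echo "STORMFORGE_TOKEN is not set for this job; check the CI/CD variable settings" >&2
    return 1
  fi
  return 0
}

# Possible use in the sf:ping job's script:
#   require_stormforge_token && stormforge ping
```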

Manage Data Sources

Data Sources allow a Test Run to pick random data out of a predefined pool, e.g. a product out of all the available inventory. If you don’t use data sources, you can skip this step.

In our workflow we use the script ./scripts/data-source.sh to generate a CSV file for our test, but this can be easily changed or extended to download the latest inventory data from a database.

```shell
./scripts/data-source.sh "${TARGET_ENV}"
stormforge create data-source \
  --from-file *.csv \
  --name-prefix-path="${SF_DS_PREFIX}/${TARGET_ENV}/" \
  --auto-field-names
```

We prefix all uploaded CSV files with our repository name and the target environment to make them easily distinguishable in the data source management of the Performance Testing application.

This step is the same for both the staging and production environments, except for the value of the TARGET_ENV variable.
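The contents of ./scripts/data-source.sh are not shown here; the real script lives in the example repository. As a hypothetical stand-in, this POSIX shell function writes a small pool of product IDs for the given environment into the current directory, so a --from-file *.csv glob like the one above would pick it up. The function name, the products.csv file name and the row format are assumptions for illustration only.

```shell
# Hypothetical stand-in for ./scripts/data-source.sh (the real script in the
# example repository may work differently).
# Usage: generate_data_source <staging|production>
generate_data_source() {
  target_env="$1"

  # Reject unknown environments early, as the test case does.
  case "$target_env" in
    staging|production) ;;
    *)
      echo "unknown environment: $target_env" >&2
      return 1
      ;;
  esac

  # Assumed format: one product ID per line, no header row.
  : > products.csv   # create or truncate the output file
  i=1
  while [ "$i" -le 10 ]; do
    printf '%s-product-%03d\n' "$target_env" "$i" >> products.csv
    i=$((i + 1))
  done
}

# In the CI job this would take the place of:
#   ./scripts/data-source.sh "${TARGET_ENV}"
```

Swapping the loop for a database export is where the "latest inventory data" variant mentioned above would plug in.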

Build the Test Run and Launch It

Note

Heads up! Testing in production can be dangerous. Make sure the configured arrival rate/load in your Test Case is low enough that you don’t trigger any incidents. The goal here is not to run stress tests regularly, but to ensure we do not experience any major performance regression under nominal load.

As the last step, we launch the Test Run. Here we pass in the environment via --define ENV=\"name\":

```shell
stormforge create test-run --test-case "${TESTCASE}" --from-file="loadtest/loadtest.mjs" \
  --define ENV=\"${TARGET_ENV}\" \
  --title="${TITLE}" \
  --notes="${NOTES}" \
  --label="git-ref=${CI_COMMIT_REF_NAME}" \
  --label="gitlab-project=${CI_PROJECT_URL}" \
  --label="gitlab-commit=${CI_COMMIT_SHA}" \
  --label="gitlab-job=${CI_JOB_STAGE} ${CI_JOB_NAME}" \
  --label="gitlab-job-url=${CI_JOB_URL}" \
  --label="gitlab-actor=${GITLAB_USER_LOGIN}" \
  ${LAUNCH_ARGS}
```

This allows us to switch the target system URLs, or simply define global variables, per environment in the loadtest.mjs file:

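```javascript
let config;

if (ENV === "production") {
  config = {
    dsPrefix: "example-github-actions/production/", // prefix of the data sources used in this environment
    host: "https://testapp.loadtest.party",
  }; // production config
} else if (ENV === "staging") {
  config = { /* ... */ }; // staging config
} else {
  // JavaScript has no built-in Exception class; Error is the standard type.
  throw new Error("unknown environment");
}
```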
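Why the escaped quotes in --define ENV=\"${TARGET_ENV}\"? The small shell sketch below (both function names are hypothetical) prints the argument that actually reaches the CLI. With \" the double quotes survive shell parsing; with plain quotes the shell strips them. We assume the CLI treats the value after ENV= as a JavaScript expression, which is what the escaped quotes in the job suggest, so only the escaped form gives the test case the string that its environment comparisons expect. Check the CLI help for the exact --define semantics.

```shell
# Hypothetical helpers that print the argument the shell hands to --define.
define_arg_escaped() {
  # Same quoting as the job: \" keeps literal double quotes in the argument.
  printf '%s\n' ENV=\"$1\"
}

define_arg_plain() {
  # Plain quotes are removed by the shell before the CLI sees the argument.
  printf '%s\n' ENV="$1"
}

define_arg_escaped staging   # prints: ENV="staging"
define_arg_plain staging     # prints: ENV=staging
```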

Note

To learn more about defining variables or loading modules, run stormforge create test-case --help.

The Combined GitLab Job

Combined into a job, the scripts and steps look like this in our .gitlab-ci.yml.

```yaml
sf:loadtest_staging:
  stage: staging:test
  except: ["schedules"]
  resource_group: staging
  extends: .stormforge # the template job defined for sf:ping
  script:
    - ./scripts/data-source.sh "${TARGET_ENV}"
    - |
      stormforge create data-source \
        --from-file *.csv \
        --name-prefix-path="${SF_DS_PREFIX}/${TARGET_ENV}/" \
        --auto-field-names
    - |
      stormforge create test-run --test-case "${TESTCASE}" --from-file="loadtest/loadtest.mjs" \
        --define ENV=\"${TARGET_ENV}\" \
        --title="${TITLE}" --notes="${NOTES}" \
        --label="git-ref=${CI_COMMIT_REF_NAME}" \
        --label="gitlab-project=${CI_PROJECT_URL}" \
        --label="gitlab-commit=${CI_COMMIT_SHA}" \
        --label="gitlab-job=${CI_JOB_STAGE} ${CI_JOB_NAME}" \
        --label="gitlab-job-url=${CI_JOB_URL}" \
        --label="gitlab-actor=${GITLAB_USER_LOGIN}" \
        ${LAUNCH_ARGS}
  variables:
    TARGET_ENV: "${TARGET_ENV_STAGING}"
    LAUNCH_ARGS: "--validate"
    NOTES: |
      Message:
      ${CI_COMMIT_MESSAGE}
    TITLE: "#${CI_CONCURRENT_ID} (${CI_COMMIT_SHORT_SHA})"
    TESTCASE: "perftest-example-gitlab-ci-${TARGET_ENV_STAGING}"
```

In this step we attach a lot of information to the Test Run.

The --notes, --title and --label flags provide metadata to enrich the Test Run report.

With LAUNCH_ARGS: "--validate" we are launching the test run only in validation mode for our staging environment.

For the production environment we are instead passing in LAUNCH_ARGS: "--nfr-check-file=./loadtest/loadtest.nfr.yaml" which performs the Non-Functional Requirement checks after the test run has finished.
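The jobs set LAUNCH_ARGS as a per-job CI/CD variable. The sketch below expresses the same per-environment choice as a shell function (launch_args_for and count_args are hypothetical helpers, not part of the example repository) and shows why ${LAUNCH_ARGS} is left unquoted at the end of the stormforge create test-run command: unquoted, an empty value disappears entirely instead of being passed as an empty argument.

```shell
# Hypothetical helper mirroring the LAUNCH_ARGS values used by the two jobs.
launch_args_for() {
  case "$1" in
    staging) printf '%s\n' "--validate" ;;
    production) printf '%s\n' "--nfr-check-file=./loadtest/loadtest.nfr.yaml" ;;
    *)
      echo "unknown environment: $1" >&2
      return 1
      ;;
  esac
}

# Prints how many arguments it received; used to show the effect of quoting.
count_args() {
  echo "$#"
}

LAUNCH_ARGS=""
count_args ${LAUNCH_ARGS}     # unquoted and empty: 0 arguments
count_args "${LAUNCH_ARGS}"   # quoted and empty: 1 (empty) argument

LAUNCH_ARGS="$(launch_args_for staging)"
count_args ${LAUNCH_ARGS}     # one flag: 1 argument
```

The trade-off of the unquoted form is word splitting: a value containing spaces (for example an NFR file path with a space in it) would be split into several arguments, so keep these flag values free of spaces.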

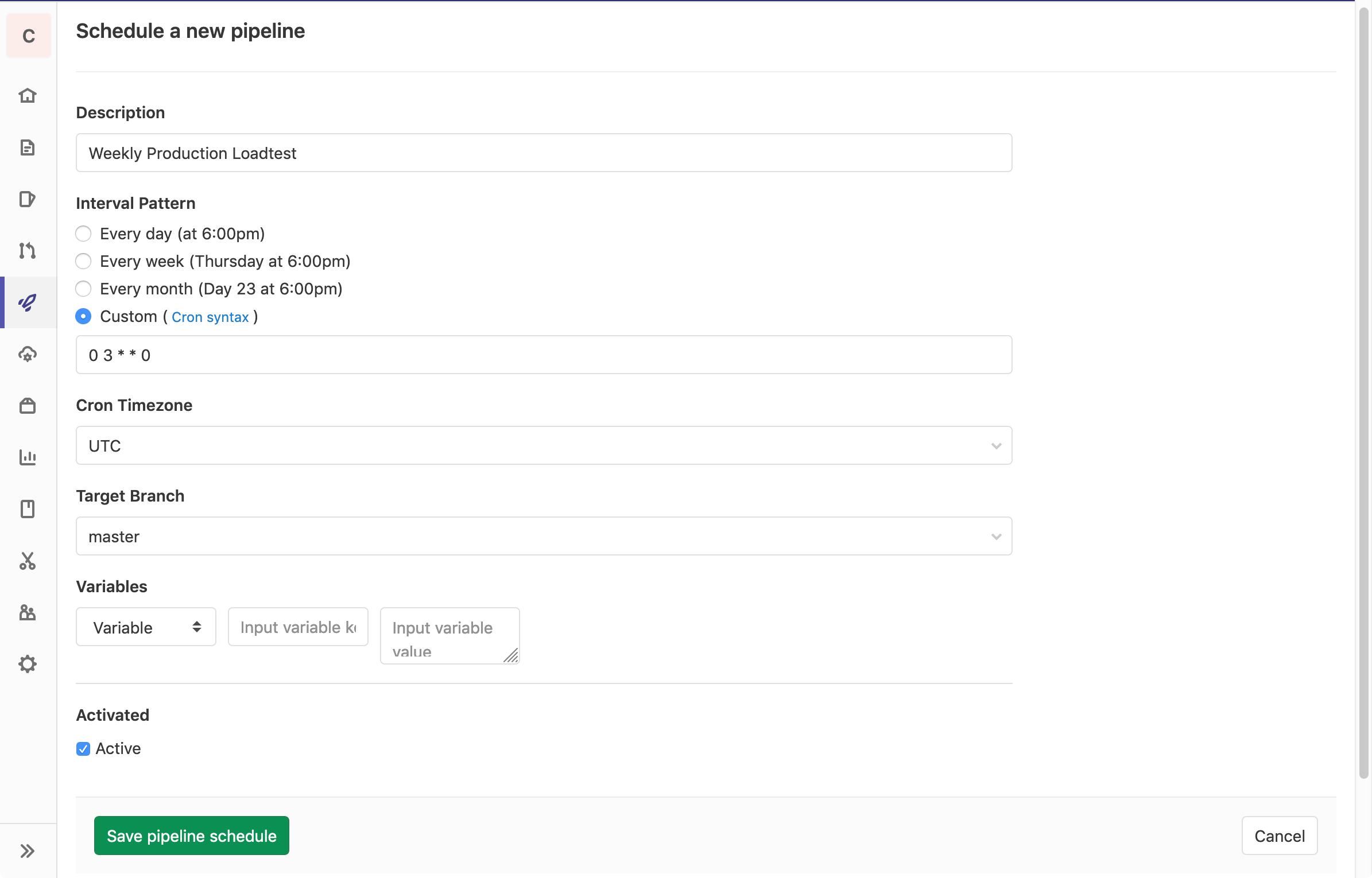

Scheduled Test-Run Execution

Finally, we want to run the load test automatically once a week via GitLab’s pipeline schedules.

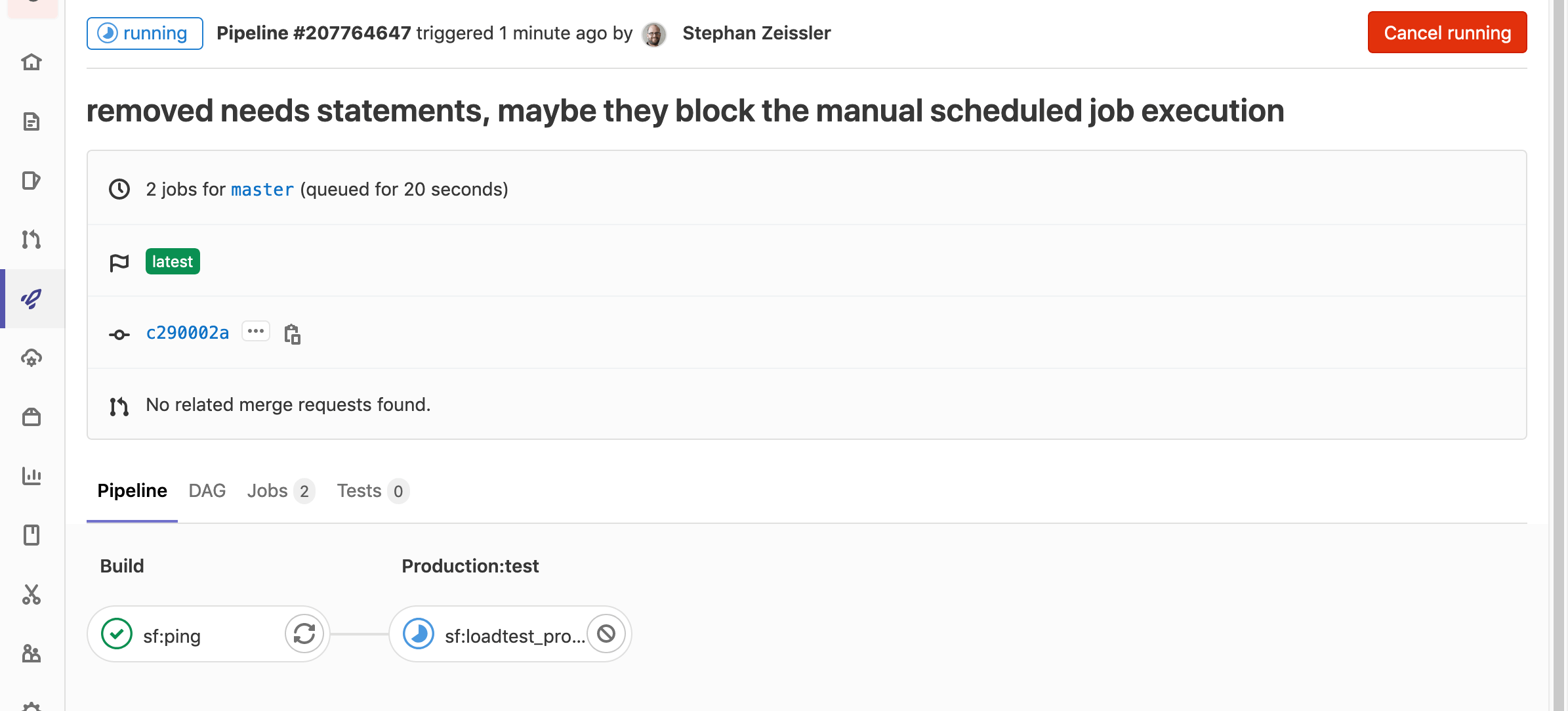

Since we don’t want to run through all phases (build, test, deploy) or redeploy to production for this, we use the except statement to exclude all other jobs from scheduled pipelines.

The sf:loadtest_production job gets no except: statement, as it is the job we actually want the schedule to run.

For the deploy_staging job, this looks like this:

```yaml
deploy_staging:
  stage: staging:deploy
  except: ["schedules"]
```
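The effect of adding except: ["schedules"] to every job other than sf:loadtest_production can be modelled as a small predicate. In scheduled pipelines GitLab sets the predefined variable CI_PIPELINE_SOURCE to schedule; the hypothetical function below returns success when a job would be skipped for that reason. It deliberately ignores any other job conditions, such as which branches run the production jobs. Note that GitLab now prefers the rules: keyword over only/except; an equivalent rule would match if: $CI_PIPELINE_SOURCE == "schedule" with when: never.

```shell
# Hypothetical model of the schedule filter in .gitlab-ci.yml: every job
# carries except: ["schedules"] except sf:loadtest_production.
# Usage: skipped_by_schedule_rule <job-name> <CI_PIPELINE_SOURCE value>
# Returns 0 (true) if the job is skipped in that pipeline.
skipped_by_schedule_rule() {
  job="$1"
  pipeline_source="$2"
  [ "$pipeline_source" = "schedule" ] && [ "$job" != "sf:loadtest_production" ]
}

# Inside a real job, the second argument would be "${CI_PIPELINE_SOURCE}".
```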

After saving the pipeline schedule, you can hit the “Play” button to trigger a test run right away.

This pipeline will now execute every Sunday night!

Summary

To summarise, we used GitLab CI/CD to automatically upload data sources and launch a Test Run for every environment in our development cycle. A weekly job verifies that the load test keeps working and that no other factors introduce regressions. By using NFR checks, we automatically verify that our non-functional requirements are fulfilled.